AD-ViT: Attribute De-biased Vision Transformer for Long-Term Person Re-identification

AD-ViT: Attribute De-biased Vision Transformer for Long-Term Person Re-identification

Abstract

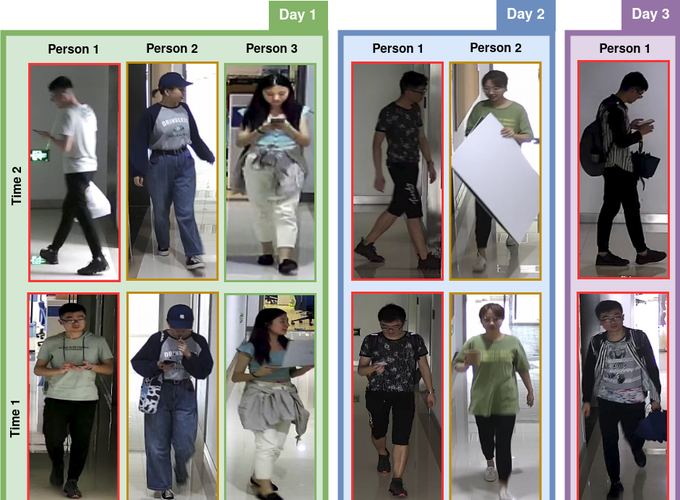

Person re-identification (re-ID) aims to retrieve images of the same identity from a gallery of person images across cameras and viewpoints. However, most works in person re-ID assume a short-term setting characterized by invariance in appearance. In contrast, a high visual variance can be frequently seen in a long-term setting due to changes in apparel and accessories, which makes the task more challenging. Therefore, learning identity-specific features agnostic of temporally variant features is crucial for robust long-term person Re-ID. To this end, we propose an Attribute De-biased Vision Transformer (AD-ViT) to provide direct supervision to learn identity-specific features. Specifically, we produce attribute labels for person instances and utilize them to guide our model to focus on identity features through gradient reversal. Our experiments on two long-term re-ID datasets - LTCC and NKUP show that the proposed work consistently outperforms current state-of-the-art methods.