Deep metric learning for computer vision: A brief overview

Abstract

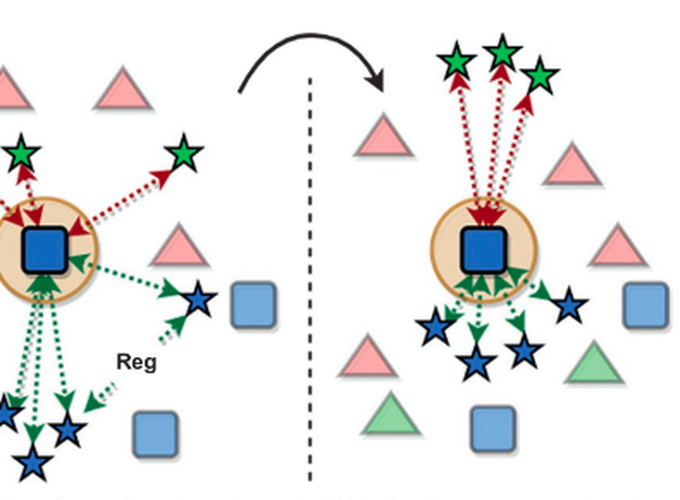

Objective functions used to optimize deep neural networks play a vital role in learning an enhanced feature representation of the input data. Although cross-entropy-based loss formulations have been used extensively in a variety of supervised deep-learning applications, they tend to be less effective when the input data distribution has large intraclass variance and low interclass variance. Deep metric learning aims to measure the similarity between data samples by learning a representation function that maps them into a representative embedding space, in which samples from the same class lie close together and samples from different classes lie far apart. It relies on carefully designed sampling strategies and loss functions to learn such a discriminative embedding space, even for distributions with low interclass and high intraclass variances. In this chapter, we provide an overview of recent progress in this area and discuss state-of-the-art deep metric learning approaches.
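To make the combination of a representation function, a sampling strategy, and a loss function concrete, the sketch below shows one widely used instance: a triplet loss with "batch-hard" mining. This is an illustrative choice, not a method prescribed by this overview. The names `embed`, `pairwise_distances`, and `batch_hard_triplet_loss` and the default `margin=0.2` are hypothetical. The linear map in `embed` is only a stand-in for a deep network. For each anchor, the loss selects the farthest same-class sample and the closest different-class sample in the batch. It then penalizes the anchor unless the negative is at least `margin` farther away than the positive.

```python
import numpy as np


def embed(x: np.ndarray, W: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a deep representation function f(x).

    A linear projection followed by L2 normalization, so every embedding
    lies on the unit hypersphere. A real system would use a trained CNN.
    """
    z = x @ W
    return z / np.linalg.norm(z, axis=1, keepdims=True)


def pairwise_distances(z: np.ndarray) -> np.ndarray:
    """Euclidean distance between every pair of rows in z (shape n x n)."""
    diff = z[:, None, :] - z[None, :, :]
    return np.sqrt(np.sum(diff ** 2, axis=-1))


def batch_hard_triplet_loss(z: np.ndarray, labels: np.ndarray,
                            margin: float = 0.2) -> float:
    """Triplet loss with batch-hard mining (an assumed, illustrative choice).

    For each anchor a: hardest positive p = farthest same-class sample,
    hardest negative n = closest different-class sample, and
    loss_a = max(0, d(a, p) - d(a, n) + margin). Returns the mean over
    anchors that have both a positive and a negative in the batch.
    """
    d = pairwise_distances(z)
    same = labels[:, None] == labels[None, :]
    not_self = ~np.eye(len(labels), dtype=bool)
    pos_mask = same & not_self   # same class, excluding the anchor itself
    neg_mask = ~same             # different class

    # Mask invalid entries so they never win the max / min.
    hardest_pos = np.where(pos_mask, d, -np.inf).max(axis=1)
    hardest_neg = np.where(neg_mask, d, np.inf).min(axis=1)

    valid = pos_mask.any(axis=1) & neg_mask.any(axis=1)
    losses = np.maximum(0.0, hardest_pos - hardest_neg + margin)
    return float(losses[valid].mean()) if valid.any() else 0.0


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(8, 4))                # 8 toy samples, 4 raw features
    labels = np.array([0, 0, 1, 1, 2, 2, 3, 3])  # 2 samples per class
    W = rng.normal(size=(4, 3))                # untrained "network" weights
    print(batch_hard_triplet_loss(embed(x, W), labels))
```

If same-class embeddings coincide and different classes are far apart, the loss is zero. If classes are interleaved, every anchor is penalized. Training would adjust the network (here, `W`) by gradient descent to reduce this value. Mining only the hardest pairs in each batch is one of several sampling strategies. Others include random and semi-hard triplet selection.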