Conditional Neural Aggregation Network For Unconstrained Long Range Biometric Feature Fusion

Abstract

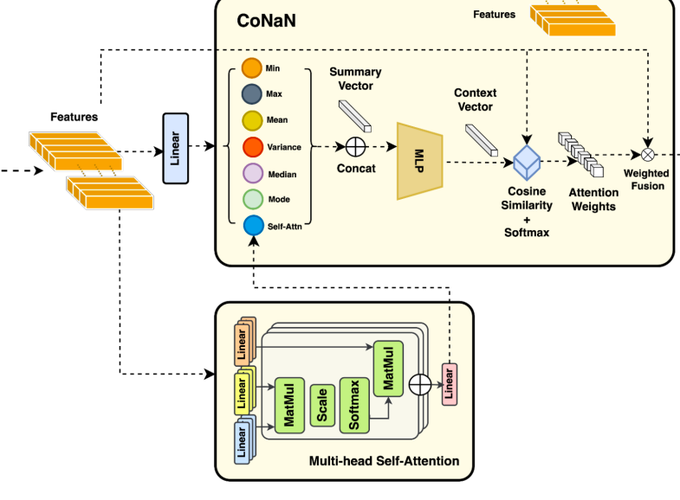

Person recognition from image sets acquired under unregulated and uncontrolled settings, such as at large distances, low resolutions, and varying viewpoints, illumination, pose, and atmospheric conditions, is challenging. Feature aggregation, which combines the set of N feature representations in a template into a single global representation, plays a pivotal role in such recognition systems. Existing work on face feature aggregation uses either metadata or high-dimensional intermediate feature representations to estimate feature quality for aggregation. However, generating high-quality metadata or style information is not feasible for extremely low-resolution faces captured in long-range and high-altitude settings. To overcome these limitations, we propose a feature distribution conditioning approach called CoNAN for template aggregation. Specifically, our method learns a context vector conditioned on the distribution information of the incoming feature set, which is then used to weigh the features according to their estimated informativeness. The proposed method produces state-of-the-art results on long-range unconstrained face recognition datasets such as BTS and DroneSURF, validating the advantages of such an aggregation strategy. We show that CoNAN generalizes beyond faces by presenting its results on other modalities such as body features and gait. We also report extensive qualitative and quantitative experiments on the different components of CoNAN.
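To make the aggregation idea concrete, the following is a minimal sketch of distribution-conditioned weighted pooling. It is not the CoNAN architecture. It assumes that the "distribution information" is the per-dimension mean and standard deviation of the set, that the context vector is a single linear projection of those statistics, and that features are scored by a dot product with the context vector followed by a softmax. The names `set_statistics`, `context_vector`, `aggregate`, and the projection matrix `proj` are hypothetical and chosen only for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def set_statistics(features):
    """Assumed distribution summary: per-dimension mean and std, concatenated."""
    n = len(features)
    dim = len(features[0])
    mean = [sum(f[d] for f in features) / n for d in range(dim)]
    var = [sum((f[d] - mean[d]) ** 2 for f in features) / n for d in range(dim)]
    return mean + [math.sqrt(v) for v in var]


def context_vector(stats, proj):
    """Hypothetical conditioning step: a D x 2D linear map of the statistics.

    In a trained model `proj` would be learned; here it is supplied by hand.
    """
    return [sum(w * s for w, s in zip(row, stats)) for row in proj]


def aggregate(features, proj):
    """Pool N feature vectors into one unit-norm template.

    Returns the template and the per-feature weights.
    """
    ctx = context_vector(set_statistics(features), proj)
    # Score each feature by its agreement with the context vector.
    scores = [sum(c * x for c, x in zip(ctx, f)) for f in features]
    weights = softmax(scores)
    dim = len(features[0])
    pooled = [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
    norm = math.sqrt(sum(p * p for p in pooled)) or 1.0
    return [p / norm for p in pooled], weights


# Toy template with N = 3 features of dimension D = 2.
features = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]
# Hand-picked projection that copies the mean part of the statistics.
proj = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
template, weights = aggregate(features, proj)
```

With an all-zero `proj` the context vector vanishes and the sketch reduces to plain average pooling, which is a useful sanity check for any weighting scheme of this kind.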