Abstract

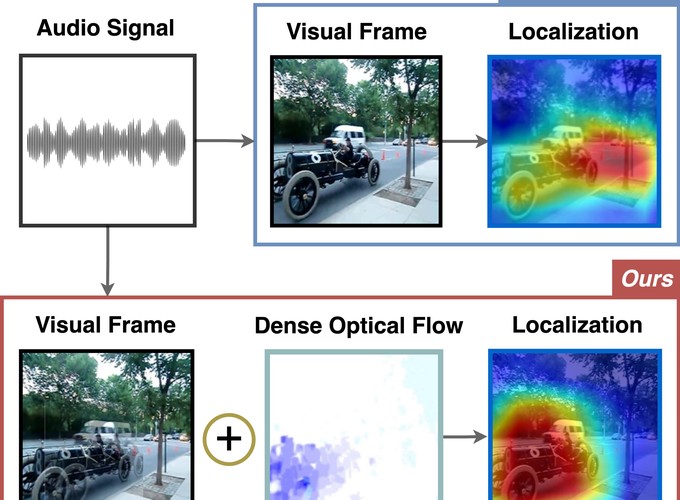

Learning to localize the sound source in videos without explicit annotations is a novel area of audio-visual research. Existing work in this area focuses on creating attention maps that capture the correlation between the two modalities to localize the source of the sound. In a video, the objects exhibiting movement are oftentimes the ones generating the sound. In this work, we capture this characteristic by modeling the optical flow in a video as a prior to better aid in localizing the sound source. We further demonstrate that adding flow-based attention substantially improves visual sound source localization. Finally, we benchmark our method on standard sound source localization datasets and achieve state-of-the-art performance on the SoundNet Flickr and VGG Sound Source datasets. Code: https://github.com/denfed/heartheflow
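To make the idea of optical flow as a localization prior concrete, the following is a minimal NumPy sketch. It is not the method described in this work. It assumes precomputed spatial visual features, a single audio embedding, and an optical-flow field already resampled to the feature grid. It computes an audio-visual cosine-similarity map and multiplies it by a normalized flow-magnitude prior. The function name `flow_weighted_localization` and all of its arguments are hypothetical, and the simple multiplicative weighting stands in for the learned flow-based attention referenced above.

```python
import numpy as np


def flow_weighted_localization(visual_feats, audio_emb, flow, eps=1e-8):
    """Hypothetical sketch: weight an audio-visual similarity map by motion.

    visual_feats: (H, W, C) spatial visual features (assumed precomputed)
    audio_emb:    (C,) audio embedding in the same space (assumed)
    flow:         (H, W, 2) optical flow (dx, dy) on the feature grid (assumed)
    Returns an (H, W) localization map with values in [0, 1].
    """
    # Cosine similarity between the audio embedding and each spatial feature.
    v = visual_feats / (np.linalg.norm(visual_feats, axis=-1, keepdims=True) + eps)
    a = audio_emb / (np.linalg.norm(audio_emb) + eps)
    av_sim = v @ a  # (H, W), values in [-1, 1]

    # Motion prior: per-location flow magnitude, min-max normalized to [0, 1].
    mag = np.linalg.norm(flow, axis=-1)
    prior = (mag - mag.min()) / (mag.max() - mag.min() + eps)

    # Shift similarity to [0, 1], then suppress regions that do not move.
    av_map = (av_sim + 1.0) / 2.0
    return av_map * prior
```

In this toy setting, a location scores highly only if its features match the audio embedding and it exhibits motion. A multiplicative prior zeroes out static regions entirely, which is a strong assumption. A learned attention module can instead decide how much to trust the flow signal.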